Deploy your containerized AI applications with nvidia-docker


More and more products and services are taking advantage of the modeling and prediction capabilities of AI. This article presents the nvidia-docker tool for integrating AI (Artificial Intelligence) software bricks into a microservice architecture. The main advantage explored here is the use of the host system's GPU (Graphics Processing Unit) resources to accelerate multiple containerized AI applications.

To understand the usefulness of nvidia-docker, we will start by describing what kind of AI can benefit from GPU acceleration. Secondly, we will present how to set up the nvidia-docker tool. Finally, we will describe which tools are available to use GPU acceleration in your applications and how to use them.

Why use GPUs in AI applications?

In the field of artificial intelligence, two main subfields are used: machine learning and deep learning. The latter is part of a larger family of machine learning methods based on artificial neural networks.

In the context of deep learning, where operations are essentially matrix multiplications, GPUs are far more efficient than CPUs (Central Processing Units). Each element of a matrix product can be computed independently of the others, which suits the massively parallel architecture of GPUs. This is why the use of GPUs has grown in recent years, to the point that they are considered the heart of deep learning.
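To make the matrix-multiplication claim concrete, here is a minimal pure-Python sketch of a fully connected layer's forward pass, y = Wx + b. It runs on the CPU, and the function names are hypothetical. Each output element is an independent dot product, which is exactly the kind of work a GPU can spread across many threads.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dot(row: Vector, x: Vector) -> float:
    """Dot product of one weight row with the input vector."""
    return sum(w * xi for w, xi in zip(row, x))


def dense_forward(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Forward pass of a fully connected layer: y = W x + b.

    Each y[i] depends only on row i of W, on x and on b[i], so the
    iterations are independent and could run in parallel on a GPU.
    """
    return [dot(row, x) + b for row, b in zip(weights, bias)]


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 outputs, 2 inputs
    b = [0.5, 0.0, -1.0]
    print(dense_forward(W, b, [1.0, 1.0]))  # [3.5, 7.0, 10.0]
```

In a real network, inputs are processed in batches, so the matrix-vector product becomes a matrix-matrix product. GPU libraries accelerate exactly that kind of product.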

However, GPUs cannot execute just any program. They require a specific programming model (CUDA for NVIDIA) to take advantage of their architecture. So how do you use and communicate with GPUs from your applications?

The NVIDIA CUDA technology

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing architecture combined with an API for programming GPUs. CUDA translates application code into an instruction set that GPUs can execute.

A CUDA SDK and libraries such as cuBLAS (Basic Linear Algebra Subroutines) and cuDNN (Deep Neural Network library) have been developed to communicate easily and efficiently with a GPU. CUDA is available in C, C++ and Fortran, and there are wrappers for other languages such as Java, Python and R. For example, deep learning libraries like TensorFlow and Keras are built on these technologies.

Why use nvidia-docker?

Nvidia-docker addresses the needs of developers who want to add AI functionality to their applications, containerize them and deploy them on servers powered by NVIDIA GPUs.

The goal is to set up an architecture that allows deep learning models to be developed and deployed as services accessible through an API. This optimizes the utilization rate of GPU resources by making them available to multiple application instances.
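To illustrate what "a model behind an API" can look like, here is a minimal sketch of one such microservice using only Python's standard library. The /predict route, port 8080 and the averaging predict() function are hypothetical placeholders. A real service would load a trained model, for example with TensorFlow, that runs on the GPU exposed by nvidia-docker.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List, Tuple


def predict(features: List[float]) -> float:
    """Hypothetical stand-in for a real (GPU-backed) model."""
    return sum(features) / len(features) if features else 0.0


def handle_predict(body: bytes) -> Tuple[int, bytes]:
    """Validate a JSON request body and return (HTTP status, JSON response)."""
    try:
        payload = json.loads(body)
        features = [float(v) for v in payload["features"]]
    except (ValueError, KeyError, TypeError):
        error = {"error": "expected a JSON object with a 'features' list of numbers"}
        return 400, json.dumps(error).encode()
    return 200, json.dumps({"prediction": predict(features)}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


if __name__ == "__main__":
    # Inside a container, bind to all interfaces so a published port reaches the server.
    HTTPServer(("0.0.0.0", 8080), PredictHandler).serve_forever()
```

Keeping the request handling in a plain function (handle_predict) separates the API contract from the HTTP plumbing. This makes it easy to test, and to swap the placeholder model for a real one.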

In addition, we benefit from the advantages of containerized environments:

- Isolation of the instances of each AI model.

- Colocation of several models with their specific dependencies.

- Colocation of several versions of the same model.

- Consistent deployment of models.

- Model performance monitoring.

Natively, using a GPU in a container requires installing CUDA in the container and granting privileges to access the device. With this in mind, the nvidia-docker tool was developed to expose NVIDIA GPU devices in containers in an isolated and secure manner.

At the time of writing, the latest version of nvidia-docker is v2. This version differs greatly from v1 in the following ways:

- Version 1: Nvidia-docker is implemented as an overlay to Docker. That is, to create the container you had to use nvidia-docker (e.g. nvidia-docker run ...), which performs the actions (among others, the creation of volumes) that make the GPU devices visible in the container.

- Version 2: Deployment is simplified by replacing Docker volumes with Docker runtimes. To launch a container, you now use the NVIDIA runtime through Docker (e.g. docker run --runtime nvidia ...).

Note that because their architectures differ, the two versions are not compatible. An application written for v1 must be rewritten for v2.
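The difference between the two versions shows up in how a container is launched. The following sketch builds the command line for each version using a hypothetical helper that is not part of nvidia-docker. It assumes that the v2 runtime is registered under the name nvidia. It also uses the NVIDIA_VISIBLE_DEVICES environment variable, which the NVIDIA runtime reads to restrict a container to specific GPUs. The image name is a placeholder.

```python
import shlex
from typing import List, Optional, Sequence


def gpu_run_command(
    image: str,
    command: Sequence[str] = (),
    version: int = 2,
    visible_devices: Optional[str] = None,
) -> List[str]:
    """Build the argv that launches a GPU container with nvidia-docker v1 or v2.

    visible_devices (e.g. "0" or "0,1") limits the GPUs the container sees;
    this sketch only supports it with the v2 runtime.
    """
    if version == 1:
        if visible_devices is not None:
            raise ValueError("GPU selection is only sketched for v2")
        # v1: the nvidia-docker wrapper is used instead of the docker CLI
        argv = ["nvidia-docker", "run"]
    elif version == 2:
        # v2: plain docker, with the NVIDIA runtime selected explicitly
        argv = ["docker", "run", "--runtime", "nvidia"]
        if visible_devices is not None:
            argv += ["-e", f"NVIDIA_VISIBLE_DEVICES={visible_devices}"]
    else:
        raise ValueError(f"unsupported nvidia-docker version: {version}")
    return argv + [image, *command]


if __name__ == "__main__":
    # "my-cuda-image" is a hypothetical image that ships nvidia-smi
    argv = gpu_run_command("my-cuda-image", ["nvidia-smi"], visible_devices="0")
    print(shlex.join(argv))
    # docker run --runtime nvidia -e NVIDIA_VISIBLE_DEVICES=0 my-cuda-image nvidia-smi
```

Passing the resulting list to subprocess.run(argv, check=True) would actually start the container. Pinning each model instance to its own GPU this way is one means of getting the per-instance isolation listed above.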

Setting up nvidia-docker

The elements required to use nvidia-docker are:

- A container runtime.

- An accessible GPU.

- The NVIDIA Container Toolkit (the main component of nvidia-docker).

Prerequisites

Docker

A container runtime is required to run the NVIDIA Container Toolkit. Docker is the recommended runtime, but Podman and containerd are also supported.

The official Docker documentation describes the installation procedure.

NVIDIA driver

Drivers are required to use a GPU device. For NVIDIA GPUs, the drivers for a given OS can be obtained from the NVIDIA driver download page by entering the GPU model information.

The drivers are installed by running the downloaded executable, with root privileges. On Linux, use the following commands, replacing the file name with that of the file you downloaded:

chmod +x NVIDIA-Linux-x86_64-470.94.run

sudo ./NVIDIA-Linux-x86_64-470.94.run

Reboot the host machine at the end of the installation so that the installed drivers are loaded.
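After the reboot, the nvidia-smi utility installed with the driver can confirm that the driver is loaded. The sketch below runs nvidia-smi with its CSV query options (--query-gpu=name,driver_version --format=csv,noheader) and parses the result. The helper names are hypothetical.

```python
import shutil
import subprocess
from typing import List, Optional, Tuple


def parse_gpu_csv(text: str) -> List[Tuple[str, str]]:
    """Parse 'name, driver_version' lines printed by nvidia-smi in CSV mode."""
    gpus = []
    for line in text.strip().splitlines():
        # Split on the last comma so the driver version is always the second field
        name, driver = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, driver))
    return gpus


def query_gpus() -> Optional[List[Tuple[str, str]]]:
    """Return (GPU name, driver version) pairs, or None if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return None  # driver not installed, or nvidia-smi not on PATH
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if gpus is None:
        print("nvidia-smi not found: check the driver installation")
    else:
        for name, driver in gpus:
            print(f"{name}: driver {driver}")
```

A None result, or an error from nvidia-smi itself, suggests that the driver installation did not complete correctly.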

Installing nvidia-docker

Nvidia-docker is available on its GitHub project page. To install it, follow the installation guide for your server and architecture.
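Under the hood, v2 works by registering the NVIDIA runtime with the Docker daemon, typically in /etc/docker/daemon.json. The installation normally takes care of this. The sketch below only illustrates the shape of that entry and makes two assumptions: that the runtime is registered as nvidia, and that the nvidia-container-runtime binary is on the daemon's PATH. The helper name is hypothetical.

```python
import copy
import json
from typing import Any, Dict


def add_nvidia_runtime(config: Dict[str, Any], make_default: bool = False) -> Dict[str, Any]:
    """Return a copy of a daemon.json config with the "nvidia" runtime registered."""
    updated = copy.deepcopy(config)  # leave the caller's dict untouched
    runtimes = updated.setdefault("runtimes", {})
    # Assumption: nvidia-container-runtime is on the Docker daemon's PATH
    runtimes["nvidia"] = {"path": "nvidia-container-runtime", "runtimeArgs": []}
    if make_default:
        # Containers then use the NVIDIA runtime even without "--runtime nvidia"
        updated["default-runtime"] = "nvidia"
    return updated


if __name__ == "__main__":
    existing = {"log-driver": "json-file"}  # example of an existing daemon.json
    print(json.dumps(add_nvidia_runtime(existing), indent=4))
```

Changes to daemon.json only take effect after the Docker daemon is restarted (for example with systemctl restart docker on systemd hosts).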

We now have an infrastructure that provides isolated environments with access to GPU resources. To use GPU acceleration in applications, NVIDIA has developed several tools (non-exhaustive list):

- CUDA Toolkit: a set of tools for developing software that performs computations using the CPU, RAM and GPU. It can be used on x86, Arm and POWER platforms.

- [NVIDIA cuDNN](https://developer.nvidia.com/cudnn): a library of primitives that accelerates deep learning networks and optimizes GPU performance for major frameworks such as TensorFlow and Keras.

- NVIDIA cuBLAS: a library of GPU-accelerated linear algebra subroutines.
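Much of what cuBLAS accelerates in deep learning comes down to the BLAS GEMM routine (cublasSgemm in single precision), which computes C = alpha * A * B + beta * C. The following pure-Python reference shows those semantics on the CPU. It is a sketch for understanding, not a cuBLAS binding, and it ignores the transpose options that the real routine offers.

```python
from typing import List

Matrix = List[List[float]]


def gemm(alpha: float, a: Matrix, b: Matrix, beta: float, c: Matrix) -> Matrix:
    """Return alpha * (A @ B) + beta * C as a new matrix.

    A is m x k, B is k x n and C is m x n (row-major nested lists).
    """
    m, k, n = len(a), len(b), len(b[0])
    shapes_ok = (
        all(len(row) == k for row in a)
        and all(len(row) == n for row in b)
        and len(c) == m
        and all(len(row) == n for row in c)
    )
    if not shapes_ok:
        raise ValueError("incompatible matrix shapes")
    # One independent dot product per output cell (i, j)
    return [
        [
            alpha * sum(a[i][p] * b[p][j] for p in range(k)) + beta * c[i][j]
            for j in range(n)
        ]
        for i in range(m)
    ]


if __name__ == "__main__":
    A = [[1.0, 2.0], [3.0, 4.0]]
    B = [[5.0, 6.0], [7.0, 8.0]]
    C = [[1.0, 1.0], [1.0, 1.0]]
    print(gemm(2.0, A, B, 1.0, C))  # [[39.0, 45.0], [87.0, 101.0]]
```

cuBLAS performs the same operation on matrices stored in GPU memory in column-major order. Calling it directly, or through a framework, is what moves this work off the CPU.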

Using these tools in application code accelerates AI and linear algebra tasks. With the GPUs now visible, the application can send data and operations to the GPU for processing.

The CUDA Toolkit is the lowest-level option. It offers the most control (over memory and instructions) for building custom applications. Libraries provide an abstraction over CUDA functionality, letting you focus on application development rather than on the CUDA implementation.

Once all these elements are in place, the architecture using the nvidia-docker service is ready to use.

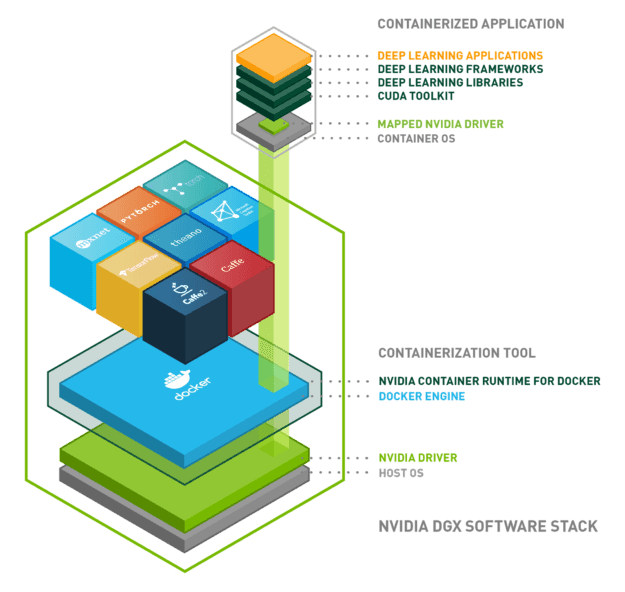

Here is a diagram to summarize every little thing we have noticed:

Conclusion

We have set up an architecture that lets our applications use GPU resources in isolated environments. To summarize, the architecture is composed of the following bricks:

- Operating system: Linux, Windows, …

- Docker: environment isolation using Linux containers

- NVIDIA driver: installation of the driver for the hardware in question

- NVIDIA container runtime: orchestration of the previous three

- Applications in Docker containers:

  - CUDA

  - cuDNN

  - cuBLAS

  - TensorFlow/Keras

NVIDIA continues to develop tools and libraries around AI technologies, with the aim of establishing itself as a leader. Depending on the use case, other technologies may complement nvidia-docker or be more suitable than it.
